NEWS: After Jan. 6, Twitter Banned 70,000 Right-Wing Accounts. Lies Plummeted, by Will Oremus, published in The Washington Post (Available Here)

This story covers a newly released study examining the impact of Twitter's deplatforming of more than 70,000 accounts associated with the right-wing QAnon movement. The accounts were responsible for disseminating misinformation and provoking violence. The study finds that removing them significantly reduced the sharing of links to “low credibility” websites, which in turn had an immediate and widespread impact on the overall spread of false information on Twitter, now known as X.

BOOK REVIEW: Why Lying On The Internet Keeps Working, A.W. Ohlheiser, published by Vox (Available Here)

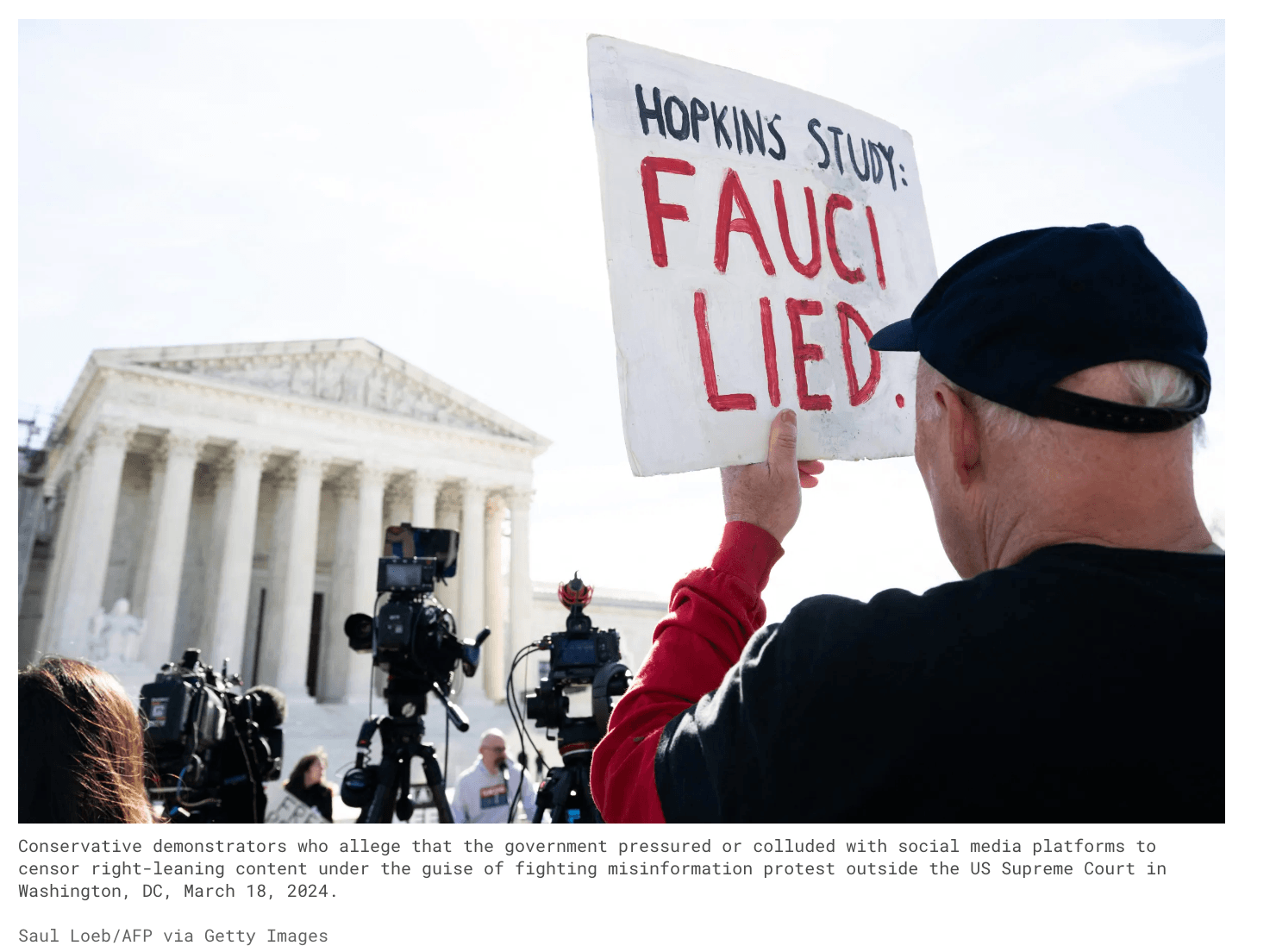

Vox senior technology reporter A.W. Ohlheiser reviews a new book, Invisible Rulers, by Stanford Internet Observatory researcher and disinformation expert Renee DiResta. The book analyzes the dynamics among influencers, algorithms and online audiences, and highlights how propagandists undermine belief in the legitimacy of democratic institutions. Ultimately, DiResta finds, the rise of disinformation is rewriting the relationship between people and government while destroying the legitimacy of democratically elected rulers.

ANALYSIS: Reviewing New Science on Social Media, Misinformation, And Partisan Assortment, by Prithvi Iyer, published by Tech Policy Press (Available Here)

Tech Policy Press reviews three recent studies focused on digital misinformation and online dynamics. The first study, published in the academic journal Science by researchers from MIT and UPenn, finds that exposure to headlines containing false information about the COVID-19 vaccines reduced vaccination intentions by 1.5 percentage points. The second study examined the impact of the most active misinformers on Twitter during the 2020 elections and found that a mere 0.3% of the US registered voters included in the study were responsible for 80% of the fake news shared on Twitter. The third study ran an experiment on political behavior on Twitter and found that users were roughly 12 times more likely to block accounts with opposing political affiliations than accounts that shared their political beliefs.